WEARABLES ARE COMING – WHAT TO DO WITH YOUR PHONE UNTIL THEN

6 June 2018

“The future Apple is working toward isn’t one we’ll be looking at through our phone screens.” This is a direct quote from an interesting Wired article that points, among other things, to Apple’s project T288.

It is still unclear when and how we will all consume and produce content solely with glasses on our noses. What we are seeing these days, though, is fast, iteration-driven development of the software those devices will need. As Matt Miesnieks puts it: “You can’t wait for headsets and then quickly do 10 years’ worth of R&D on the software.”

While we wait for super-smart glasses from outer space, let’s take a few minutes to rethink the phone before it takes its place in the museum of technical gadgets. I put my thoughts on this together for a presentation at this year’s international #Mojofest in Galway, Ireland. Here you will find a couple of slides and links from that presentation.

For years I have compared the smartphone’s capabilities to a decent Swiss Army knife. It is almost always at hand. It is a good problem solver. An all-rounder. There are better tools out there if you need specialized equipment, but the phone is good enough, and even better, at a lot of things.

We need a new metaphor. Otherwise we will miss all the new things our phones can do. And before we all go crazy over smart spectacles that do not exist yet, we had better get to know these miraculous new machines, which are more like sci-fi lightsabres than, err, phones.

There are three things I want to focus on:

1. Our phones can see now. We need to talk more about computer vision.
2. Our phones are getting better and better at understanding things. We need to make more use of their machine learning capabilities.
3. Layered storytelling will be everything, everywhere, all the time. Photos and videos are just a basic ground layer, enhanced with data.

This is how we imagined machines would look at us humans: Terminator style. See the numbers? Sure you do. They stand for: a machine is looking at us. Pretty silly, right?

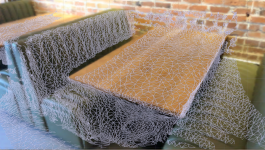

This is a short glimpse of a 2015 video presenting Google’s Tango project. And if the word tango makes you think of dancing, no worries: Tango was shut down at the end of 2017. But topics like 3D scanning and accurate indoor positioning are very much alive, and they affect, or will affect, your daily life as a journalist or content producer.

You Only Look Once (YOLO) is a state-of-the-art, real-time object detection system. Pretty impressive, right?

The idea behind ‘Open Data Cam’ was to create a free, easy-to-use platform for detecting objects in urban settings. Creating data through real-time detections can change the way we make decisions and perceive our urban surroundings. With these tools at hand, it’s up to you what you want to quantify.

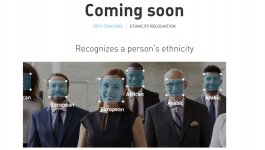

Do we really want to do this? A new ‘ethnicity recognition’ tool is just automated racial profiling.

Typical lobby of a Parisian building, scanned with Tango Constructor on a Lenovo Phab 2 Pro.

6D.ai is creating a real-time 3D map of the world.

Here are the links around the question “What’s next for 360/VR/AR?”, focusing on the smartphone as a tool for content creators. And here is my first and last Twitter Moment documenting the panel.