SPATIAL COMPUTING

9 December 2019

My main field of interest in 2019 was, yet again, everything concerning the mixing of various forms of digital and analog reality. Here I try to list, sum up and somewhat structure some of my findings.

AR in 2019 feels like doing mobile phone work in 2002-2003

There were a bunch of tweets with a similar connotation, all part of an ongoing discussion: when will we all finally enter the new stage of computing, leaving smartphones behind and wearing super comfortable glasses with magic-like capabilities? The answer is clear and unclear. As always. It will take longer than expected. Probably two years, or ten years, or longer, depending on who you ask or follow on Twitter. At the same time, it will all just happen so fast. Think about face filters, AirPods, Google AR navigation, IKEA AR, etc. As always: the future is already here. It is just unevenly distributed. And you might get dizzy on the way, lose track or start chasing the next bright shiny object. Beware, young Padawan.

We do not know the simplicity & certainty of a physical identity alone determining our existence

The current digital paradigm is a phygital existence (a word I learned this year and do not like very much): a combination of the virtual and the real, of physical and digital experiences, that together determine our subjective reality. This is the broader context, and it is what makes the enormous consequences of the spatial computing shift hard to grasp when you come from a more or less purely analog background.

Our simultaneous existence in physical and digital space

We were happy when we could google information in the late '90s, and we were happy when we started carrying our computers around in the early 2010s. But now these computers increasingly understand their surroundings and can enhance them. Information in situ, always there, where and when you need it. So much to try out now. So much to learn. The key question along the way: does this or that application add value for the user?

"Facebook, Google, Apple, Microsoft: building up spatial details of the world". All big tech companies are obviously working in that area. And we are talking about "a computing modality rather than a display modality".

It’s been called the AR Cloud by many, the Magicverse by Magic Leap, the Mirrorworld by Wired, the Cyberverse by Huawei, Planet-scale AR by Niantic and Spatial Computing by academics

In his very long Medium post, Andy Polaine helpfully sums up what is possible right now in the age of synthetic realities, because spatial computing is of course fueled by AI, among other things. Andy writes: "Let's recap. We can now: synthesise people, faces, objects, animals; capture and re-generate poses and movement; modify facial expressions; synthesise places and landscapes; clone voices; generate completely new imagery; generate writing; effortlessly erase anything from images/video; generate GUIs and UX flows; link them all together in design workflows."

So what was actually worked on in 2019? What kinds of results, tests and experiments are out there? And what do all these results teach us? The field is vast, as you can see in every single monthly write-up by Tom Emrich: AR Roundup. I will pick out a few in areas like journalism, art and visual experiments.

AR and VR will make spatial journalism the future of reporting

The New York Times has been playing around with AR. I liked the pollution dots. They add value, and they are seamlessly integrated into the app. It fits perfectly with everything the NYT has been trying in this field for months and years now. Here is another one: you can stand where Neil Armstrong and Buzz Aldrin were standing. And there is a German version of the moon landing.

The Knight Lab has worked on some AR prototypes recently. While the results are certainly not overwhelming, I like the documentation of the process and the use of tools like Torch. And while the video for the New Yorker AR cartoon app is hilarious and great, the app itself is not much fun.

Storytelling in AR is Like Writing in 3D

But instead of talking about how AR will democratize Hollywood production skills the same way Instagram democratized professional photography, or about voice-driven AR combining spatial storytelling with grains of interactivity, I'd rather talk about vanishing: something some people have been very keen on achieving in AR this year.

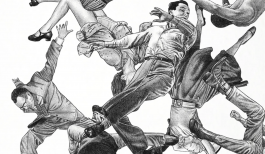

Nerds re-invent camouflage

It more or less started in April: psychedelically glitch the hell out of them. Because if you can detect all these people, you should be able to get rid of them as well, right?! In June someone else started erasing people with ARKit 3. Also in June, Zach Lieberman made a very good attempt at hiding. In July Kitasenju tried some decent optical camouflage.

A camera app that automatically removes all people

But why play hide and seek when you can use AR Beauty Try-On, try on shoes with your smartphone, or solve Brexit? You can shop for AR clothes. Or show your animated portfolio on a T-shirt.

Fashion is a filter

The world is a strange place. And while we might get a browser for reality soon, I think the most intriguing examples of spatial storytelling leave known spaces behind. The new narratives inside "our latest hybrid GAN model" with an experimental browser are beautiful. And so are the next-level trips. We can travel back in time while moving away, compose reality, and/or add happy things.

We need new lands, we need new landscapes, together with new cities, we need new structure

This is probably why all the big tech companies are working so closely with artists to shape, test or sell AR right now: see, e.g., Adobe, Facebook and Google. This needs further investigation; I have a hell of a lot of links saved for another article. Beware. Before we come to a grinding halt, two things are obvious now. Face filters have become a sort of AR killer app, as I wrote here in January. And my only viral tweet ever certainly does not show any kind of future. At least not literally. On a metaphorical level, I am pretty sure that we are all trapped inside a green room, trudging helplessly across imagined wet grass.